Automatic Perception and Target Detection in LiDAR Data

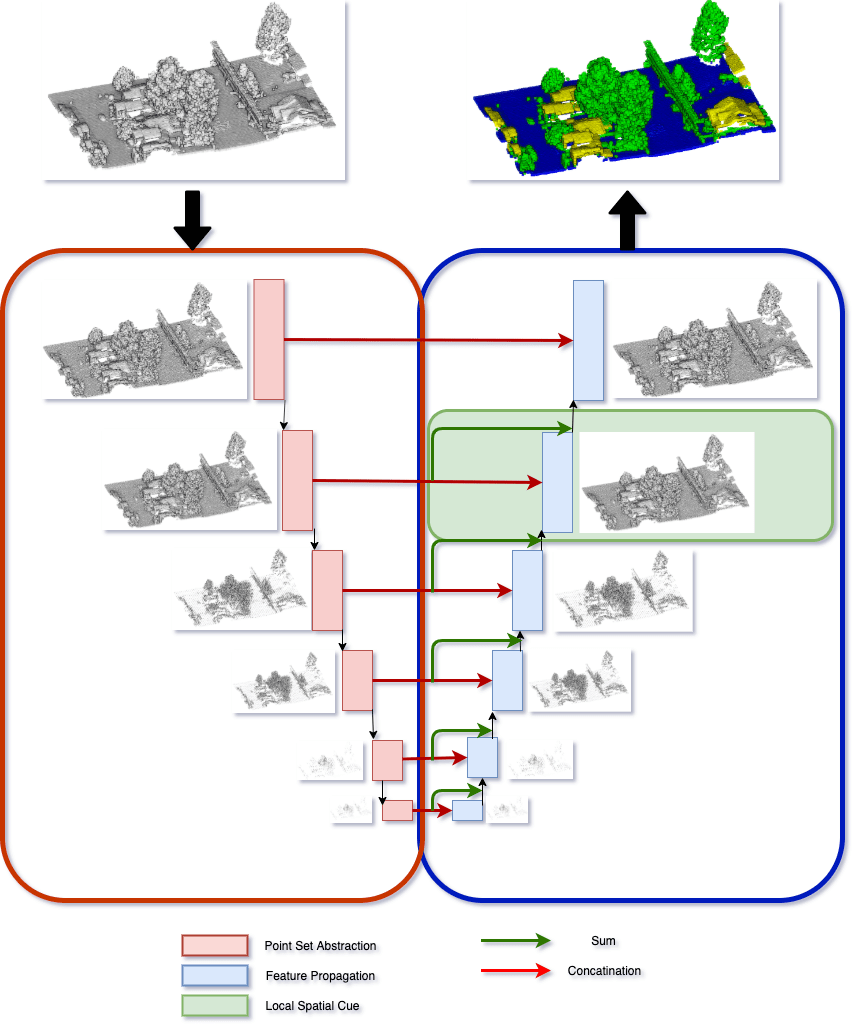

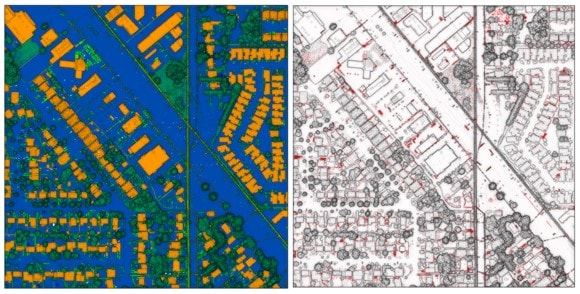

DeepLiDAR

As deep learning techniques have nearly mastered interpreting simple images, there is a surge of interest in adapting machine learning to data sets beyond those perceptible to the human eye. Because aerial LiDAR is expensive to collect and labeled data is scarce, there has been little research into deep learning on aerial LiDAR. We propose a novel deep learning algorithm called CueNet, which performs semantic segmentation on aerial LiDAR point clouds. We use PointNet++ as the foundation of our architecture. By introducing an extended field of view, we provide more contextual information to the network and increase the amount of available global information, which is necessary for aerial data. We also add depth to the network, along with additional residual connections that strengthen the local spatial information. CueNet is tailored for aerial LiDAR and improves on the mean IoU of the standard PointNet++ by over 7%. Upcoming research will focus on developing a method for instance segmentation, providing bounding boxes that identify individual objects within our eight classes.
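For readers unfamiliar with the metric, mean IoU (intersection over union, averaged over classes) can be computed from per-point predictions as in the minimal sketch below. This is an illustration only, not the project's evaluation code: the function name `mean_iou`, the class ordering in `CLASSES`, and the choice to skip classes absent from both prediction and ground truth are all assumptions.

```python
# The eight classes named in the project goals; the index order is an assumption.
CLASSES = ["ground", "building", "vegetation", "car",
           "truck", "pole", "power line", "fence"]


def mean_iou(pred, truth, num_classes=len(CLASSES)):
    """Return (mean IoU, per-class IoU list) for two equal-length label sequences.

    Labels are integer class indices in [0, num_classes). Classes that appear
    in neither sequence get None and are left out of the mean (an assumption;
    other conventions exist).
    """
    if len(pred) != len(truth):
        raise ValueError("pred and truth must have the same length")

    intersection = [0] * num_classes
    union = [0] * num_classes
    for p, t in zip(pred, truth):
        if p == t:
            # Correct point: counts once in both intersection and union.
            intersection[p] += 1
            union[p] += 1
        else:
            # Wrong point: enlarges the union of both classes involved.
            union[p] += 1
            union[t] += 1

    per_class = [i / u if u else None for i, u in zip(intersection, union)]
    present = [v for v in per_class if v is not None]
    mean = sum(present) / len(present) if present else 0.0
    return mean, per_class


if __name__ == "__main__":
    predicted = [0, 0, 1, 1]
    labeled = [0, 1, 1, 1]
    score, per_class = mean_iou(predicted, labeled)
    print(f"mean IoU: {score:.3f}")
```

Averaging over classes rather than over points keeps rare classes such as poles and power lines from being swamped by ground and vegetation, which typically dominate aerial scenes.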

Goals

- Semantic segmentation for eight classes of objects in aerial LiDAR: ground, buildings, vegetation, cars, trucks, poles, power lines and fences.

- Instance segmentation to provide bounding boxes to identify individual objects within the scene.
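As a rough illustration of the second goal, the sketch below derives one axis-aligned 3D bounding box per object, assuming each point already carries an instance id. How those ids would be produced is not described here, so the function `bounding_boxes` and its inputs are hypothetical, and axis-aligned boxes are only one possible box format.

```python
def bounding_boxes(points, instance_ids):
    """Map each instance id to ((min_x, min_y, min_z), (max_x, max_y, max_z)).

    `points` is a sequence of (x, y, z) tuples and `instance_ids` assigns one
    id per point; both are hypothetical inputs for this sketch.
    """
    if len(points) != len(instance_ids):
        raise ValueError("points and instance_ids must have the same length")

    boxes = {}
    for (x, y, z), inst in zip(points, instance_ids):
        if inst not in boxes:
            # First point of this instance: the box collapses to that point.
            boxes[inst] = [[x, y, z], [x, y, z]]
        else:
            lo, hi = boxes[inst]
            for axis, value in enumerate((x, y, z)):
                lo[axis] = min(lo[axis], value)
                hi[axis] = max(hi[axis], value)

    return {inst: (tuple(lo), tuple(hi)) for inst, (lo, hi) in boxes.items()}


if __name__ == "__main__":
    cloud = [(0.0, 0.0, 0.0), (1.0, 2.0, 3.0), (5.0, 5.0, 5.0)]
    ids = [7, 7, 9]
    for inst, (lo, hi) in bounding_boxes(cloud, ids).items():
        print(inst, lo, hi)
```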